Imagine that a robot is helping you clean the dishes. You ask it to grab a soapy bowl out of the sink, but its gripper slightly misses the mark.

Using a new framework developed by MIT and NVIDIA researchers, you could correct that robot’s behavior with simple interactions. The method would allow you to point to the bowl or trace a trajectory to it on a screen, or simply give the robot’s arm a nudge in the right direction.

Unlike other methods for correcting robot behavior, this technique does not require users to collect new data and retrain the machine-learning model that powers the robot’s brain. It enables a robot to use intuitive, real-time human feedback to choose a feasible action sequence that gets as close as possible to satisfying the user’s intent.

When the researchers tested their framework, its success rate was 21 percent higher than that of an alternative method that did not leverage human interventions.

In the long run, this framework could enable a user to more easily guide a factory-trained robot to perform a wide variety of household tasks, even though the robot has never seen their home or the objects in it.

“We can’t expect laypeople to perform data collection and fine-tune a neural network model. The consumer will expect the robot to work right out of the box, and if it doesn’t, they would want an intuitive mechanism to customize it. That is the challenge we tackled in this work,” says Felix Yanwei Wang, an electrical engineering and computer science (EECS) graduate student and lead author of a paper on this method.

His co-authors include Lirui Wang PhD ’24 and Yilun Du PhD ’24; senior author Julie Shah, an MIT professor of aeronautics and astronautics and the director of the Interactive Robotics Group in the Computer Science and Artificial Intelligence Laboratory (CSAIL); as well as Balakumar Sundaralingam, Xuning Yang, Yu-Wei Chao, Claudia Perez-D’Arpino PhD ’19, and Dieter Fox of NVIDIA. The research will be presented at the International Conference on Robotics and Automation.

Mitigating misalignment

Recently, researchers have begun using pre-trained generative AI models to learn a “policy,” or a set of rules, that a robot follows to complete an action. Generative models can solve multiple complex tasks.

During training, the model only sees feasible robot motions, so it learns to generate valid trajectories for the robot to follow.

While these trajectories are valid, that doesn’t mean they always align with a user’s intent in the real world. The robot might have been trained to grab boxes off a shelf without knocking them over, but it could fail to reach the box on top of someone’s bookshelf if the shelf is oriented differently than those it saw in training.

To overcome these failures, engineers typically collect data demonstrating the new task and retrain the generative model, a costly and time-consuming process that requires machine-learning expertise.

Instead, the MIT researchers wanted to allow users to steer the robot’s behavior during deployment when it makes a mistake.

But if a human interacts with the robot to correct its behavior, that could inadvertently cause the generative model to choose an invalid action. It might reach the box the user wants, but knock books off the shelf in the process.

“We want to allow the user to interact with the robot without introducing those kinds of mistakes, so we get a behavior that is much more aligned with user intent during deployment, but that is also valid and feasible,” Wang says.

Their framework accomplishes this by providing the user with three intuitive ways to correct the robot’s behavior, each of which offers certain advantages.

First, the user can point to the object they want the robot to manipulate in an interface that shows its camera view. Second, they can trace a trajectory in that interface, allowing them to specify how they want the robot to reach the object. Third, they can physically move the robot’s arm in the direction they want it to follow.

“When you are mapping a 2D image of the environment to actions in a 3D space, some information is lost. Physically nudging the robot is the most direct way to specify user intent without losing any of the information,” says Wang.
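One way to picture how the three kinds of input could be checked against a candidate robot motion is a set of simple alignment scores. The Python sketch below is illustrative only and is not the researchers’ code: the pinhole-camera intrinsics (`FOCAL`, `CX`, `CY`) and the helpers `project`, `point_cost`, `sketch_cost` and `nudge_cost` are hypothetical. Points and sketches are compared in the 2D image after projection, which discards depth, while a nudge is compared directly in 3D.

```python
import math

Vec3 = tuple[float, float, float]  # camera-frame point; z > 0 means in front of the camera
Pixel = tuple[float, float]

# Hypothetical pinhole-camera intrinsics (focal length and image center, in pixels).
FOCAL, CX, CY = 500.0, 320.0, 240.0


def project(p: Vec3) -> Pixel:
    """Project a 3D camera-frame point to pixel coordinates; depth is discarded."""
    x, y, z = p
    return (FOCAL * x / z + CX, FOCAL * y / z + CY)


def point_cost(traj: list[Vec3], clicked: Pixel) -> float:
    """Pixel distance between the trajectory's projected endpoint and the user's click."""
    return math.dist(project(traj[-1]), clicked)


def sketch_cost(traj: list[Vec3], sketch: list[Pixel]) -> float:
    """Mean pixel distance from each projected waypoint to its nearest sketched point."""
    pixels = [project(p) for p in traj]
    return sum(min(math.dist(q, s) for s in sketch) for q in pixels) / len(pixels)


def nudge_cost(traj: list[Vec3], push: Vec3) -> float:
    """1 - cosine similarity between the first motion step and the physical push, in 3D."""
    step = [b - a for a, b in zip(traj[0], traj[1])]
    dot = sum(s * p for s, p in zip(step, push))
    return 1.0 - dot / (math.hypot(*step) * math.hypot(*push))


# Two candidate reaches that end on the same camera ray but at different depths.
near = [(0.0, 0.0, 1.0), (0.05, 0.0, 1.0), (0.1, 0.0, 1.0)]
far = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.5), (0.2, 0.0, 2.0)]
click = (370.0, 240.0)
push = (0.0, 0.0, 1.0)  # the user pushes the arm away from the camera

print(point_cost(near, click), point_cost(far, click))  # a click cannot tell them apart
print(nudge_cost(near, push), nudge_cost(far, push))    # a nudge can
```

In this toy setup both reaches project to the same pixel, so the click scores them identically, while the push favors the reach that moves in depth. That mirrors the point about information lost when mapping a 2D image to 3D actions.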

Sampling for success

To ensure these interactions don’t cause the robot to choose an invalid action, such as colliding with other objects, the researchers use a specific sampling procedure. This technique lets the model choose, from the set of valid actions, the one that most closely aligns with the user’s goal.
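A minimal way to express “choose, from the valid actions, the one closest to the user’s goal” is to filter candidate trajectories for feasibility and then rank the survivors by an alignment score. The Python sketch below assumes 2D waypoints, circular obstacles and an endpoint-distance score, all hypothetical simplifications. It is a plain filter-and-rank baseline, not the researchers’ sampling procedure; in a real system the candidates would come from the learned generative policy rather than a hand-written list.

```python
import math
from typing import Optional

Point = tuple[float, float]
Obstacle = tuple[Point, float]  # (center, radius)


def collides(traj: list[Point], obstacles: list[Obstacle]) -> bool:
    """True if any waypoint falls inside a circular obstacle."""
    return any(math.dist(p, center) < radius for p in traj for center, radius in obstacles)


def alignment_cost(traj: list[Point], goal: Point) -> float:
    """How far the trajectory's endpoint lands from the user's goal."""
    return math.dist(traj[-1], goal)


def choose_action(candidates: list[list[Point]], goal: Point,
                  obstacles: list[Obstacle]) -> Optional[list[Point]]:
    """Drop infeasible candidates, then return the one best aligned with the goal."""
    valid = [t for t in candidates if not collides(t, obstacles)]
    if not valid:
        return None  # nothing feasible: resample or ask the user for more input
    return min(valid, key=lambda t: alignment_cost(t, goal))


# Hypothetical stand-ins for trajectories sampled from a learned policy.
candidates = [
    [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)],  # passes through the obstacle
    [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],  # feasible but far from the goal
    [(0.0, 0.0), (0.5, 1.5), (1.8, 2.4)],  # feasible and close to the goal
]
obstacles = [((1.0, 1.0), 0.3)]
best = choose_action(candidates, goal=(2.0, 2.5), obstacles=obstacles)
```

Because feasibility is checked before alignment, the user’s goal can only choose among motions that are already valid; it can never push the robot into the obstacle.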

“Rather than just imposing the user’s will, we give the robot an idea of what the user intends but let the sampling procedure oscillate around its own set of learned behaviors,” Wang explains.
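The idea of a sampler that is told what the user intends but keeps returning to what it has learned can be illustrated with a toy Langevin-style sampler. The sketch below is an assumption-laden stand-in, not the researchers’ procedure: the “learned behaviors” are a hypothetical two-mode Gaussian mixture over 2D reach targets, and the user’s intent is a weak Gaussian pull toward a goal. Each step follows the sum of both log-density gradients plus random noise, so samples jitter around the learned mode nearest the goal instead of landing exactly on it.

```python
import math
import random

Point = tuple[float, float]


def learned_score(x: Point, modes: list[Point], sigma: float) -> Point:
    """Gradient of log p(x) for an equal-weight Gaussian mixture of learned behaviors."""
    logs = [-math.dist(x, m) ** 2 / (2 * sigma**2) for m in modes]
    top = max(logs)  # subtract the max before exponentiating, for numerical safety
    w = [math.exp(lg - top) for lg in logs]
    total = sum(w) * sigma**2
    return (sum(wi * (m[0] - x[0]) for wi, m in zip(w, modes)) / total,
            sum(wi * (m[1] - x[1]) for wi, m in zip(w, modes)) / total)


def steer(modes: list[Point], goal: Point, start: Point, sigma: float = 0.5,
          tau: float = 3.0, step: float = 0.01, n_steps: int = 500, seed: int = 0) -> Point:
    """Unadjusted Langevin steps targeting p_learned(x) * p_user(x)."""
    rng = random.Random(seed)
    x = start
    for _ in range(n_steps):
        sx, sy = learned_score(x, modes, sigma)
        # Weak pull toward the user's goal: gradient of a wide Gaussian (std tau).
        ux, uy = (goal[0] - x[0]) / tau**2, (goal[1] - x[1]) / tau**2
        x = (x[0] + 0.5 * step * (sx + ux) + math.sqrt(step) * rng.gauss(0.0, 1.0),
             x[1] + 0.5 * step * (sy + uy) + math.sqrt(step) * rng.gauss(0.0, 1.0))
    return x


modes = [(0.0, 0.0), (10.0, 0.0)]  # two behaviors the toy policy already knows
goal = (8.0, 1.0)                  # where the user pointed
samples = [steer(modes, goal, start=goal, seed=s) for s in range(20)]
mean = (sum(p[0] for p in samples) / len(samples), sum(p[1] for p in samples) / len(samples))
```

Here `tau` is much wider than `sigma`, so the user’s input mostly decides *which* learned behavior is selected while the learned distribution decides *where* the sample ends up. Shrinking `tau` would let the goal override the learned behavior, which is the failure mode the article warns about.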

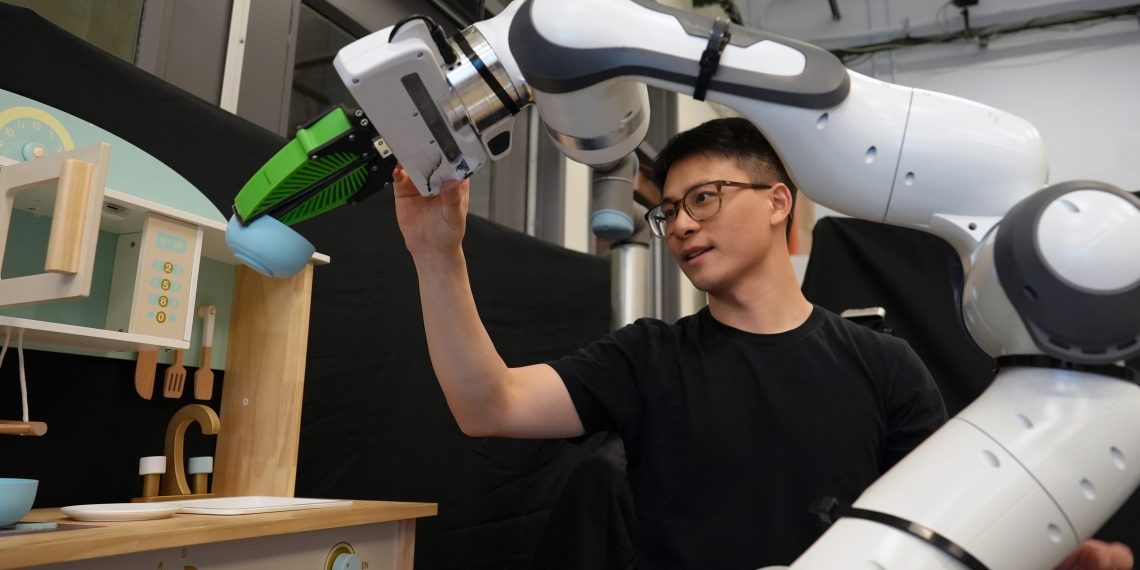

This sampling method enabled the researchers’ framework to outperform the other methods they compared it to during simulations and experiments with a real robot arm in a toy kitchen.

While their method might not always complete the task right away, it offers users the advantage of being able to immediately correct the robot if they see it doing something wrong, rather than waiting for it to finish and then giving it new instructions.

Moreover, after a user nudges the robot a few times until it picks up the correct bowl, the robot could log that corrective action and incorporate it into its behavior through future training. Then, the next day, the robot could pick up the correct bowl without needing a nudge.
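How corrections would be folded into later training is not specified here. As a hypothetical illustration, a robot could keep a small log of steered episodes and export only the successful ones as training examples. The `Correction` and `CorrectionLog` names, their fields and the JSON Lines export below are all assumptions made for this sketch.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Correction:
    task: str                      # e.g. "pick up the bowl"
    interaction: str               # "point", "sketch" or "nudge"
    trajectory: list[list[float]]  # waypoints the robot executed after steering
    succeeded: bool                # did the corrected attempt complete the task?


@dataclass
class CorrectionLog:
    entries: list[Correction] = field(default_factory=list)

    def record(self, correction: Correction) -> None:
        self.entries.append(correction)

    def training_examples(self) -> str:
        """Export successful corrections as JSON Lines for a later fine-tuning run."""
        return "\n".join(json.dumps(asdict(c)) for c in self.entries if c.succeeded)


log = CorrectionLog()
log.record(Correction("pick up the bowl", "nudge", [[0.40, 0.10, 0.30]], succeeded=False))
log.record(Correction("pick up the bowl", "nudge", [[0.42, 0.05, 0.28]], succeeded=True))
print(log.training_examples())
```

Keeping only successful attempts is one simple design choice; a real pipeline might also keep failed attempts as negative examples.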

“But the key to that continuous improvement is having a way for the user to interact with the robot, which is what we have shown here,” Wang says.

In the future, the researchers want to boost the speed of the sampling procedure while maintaining or improving its performance. They also want to experiment with robot policy generation in novel environments.
